Coming from someone who has written it before.

Maybe you have seen stories like this floating around:

Jezebel / Via jezebel.com

Or perhaps you noticed this one that made the rounds a few months ago:

Latin Times / Via latintimes.com

For the record, farts do NOT cure cancer. Drinking a glass of red wine is NOT the same as getting actual exercise. And scientists definitely did not say .

The BMJ recently published a study about exaggeration in health-related science news; the authors found a strong association between hype in health reporting and exaggerated press releases. They suggest that if university press offices and researchers were more careful about the accuracy of their press releases, the news articles written about their research might be more accurate too. (Others pointed out that if health journalists did their jobs better, this wouldn’t be an issue either. Point well taken.)

Until that happens, though… how can you, as a reader, learn to read health journalism critically, so that you know how to call B.S. on fantastical headlines or sloppy reporting? BuzzFeed Life reached out to four experts who work in medicine and/or health journalism to help answer this question.

Here are some basic pointers:

If the research wasn’t conducted on humans, the findings can’t necessarily be extrapolated to humans.


Chemicals, drugs, foods, and so on can behave differently in animals than they do in people. And just because something works in a lab doesn’t mean that it’ll work the same way outside of a lab.

Ed Yong, an award-winning science journalist who writes the blog Not Exactly Rocket Science for National Geographic, gives this example: “You’ll see things like chemical X, which is found in food Y, kills cancer cells in a dish,” Yong tells BuzzFeed Life. “So that gets translated into Eat More Food Y. And that’s a bit ridiculous. Because a lot of things kill cancer cells in a dish, and food Y contains a lot of different things beyond chemical X, it also contains A, B, C, and D, which may not be very good for you.”

Correlation is not causation!

This is a big one. Lots of studies can find connections between two things — but that doesn’t mean that one of those things caused the other thing. It just means that there is an interesting association, which could be good reason to do further research to learn more.

As for what’s behind that association, there could be any number of answers. Maybe one thing did cause the other. Or maybe something else caused both. Or maybe the two things have separate, unrelated causes and only appear to be linked. Or maybe some other reason!
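To see the “something else caused both” scenario in actual numbers, here is a minimal Python sketch. Everything in it is made up for illustration: the temperature readings, the ice cream and sunburn figures, and the `pearson` helper are all assumptions, not data from any study.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical hidden factor: average temperature for each of 12 months.
temperature = [2, 4, 9, 14, 19, 24, 27, 26, 21, 15, 8, 3]

# Small made-up wobbles so the two series are not perfectly tidy.
sales_wobble = [3, -2, 4, -5, 1, 2, -3, 5, -1, 0, 2, -4]
burn_wobble = [-1, 2, 0, 1, -2, 3, 1, -3, 2, -1, 0, 1]

# Each outcome is computed from temperature only, never from the other outcome.
ice_cream_sales = [50 + 10 * t + w for t, w in zip(temperature, sales_wobble)]
sunburn_cases = [5 + 2 * t + w for t, w in zip(temperature, burn_wobble)]

r = pearson(ice_cream_sales, sunburn_cases)
print(f"correlation between ice cream sales and sunburns: {r:.2f}")
```

The two made-up series move together almost perfectly, even though the code never lets one influence the other. A headline claiming that ice cream causes sunburn would be reading causation into a link that a third factor fully explains.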

Here are some funny examples of how confusing correlation and causation can go very, very wrong, courtesy of Tyler Vigen, the genius behind the blog Spurious Correlations:

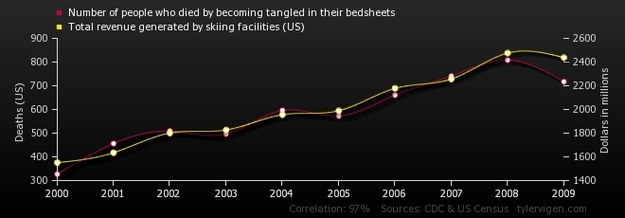

Tyler Vigen / Via tylervigen.com

More Skiing Leads to Increase in Bedsheet Entanglement Deaths: This chart shows a correlation between the number of people who die by becoming tangled in their bedsheets, and total revenue generated by U.S. skiing facilities. Clearly completely unrelated… but imagine the potential (inaccurate) headlines! Just think of the clicks!

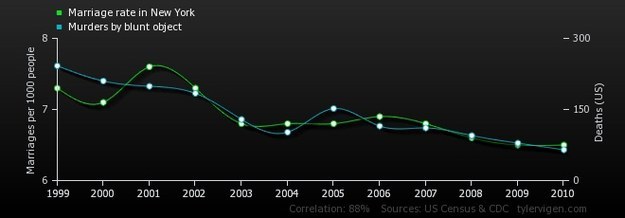

Tyler Vigen / Via tylervigen.com

Fewer New York Marriages Means Fewer People Murdered by Blunt Objects: Blunt object murders are going down, and so is the marriage rate in New York. These are totally unrelated facts, but when you put them together in a nifty little graph, it certainly can make you wonder if New York marriages are actually tragically deadly.

But it shouldn’t! Because this is a spurious correlation, not causation!

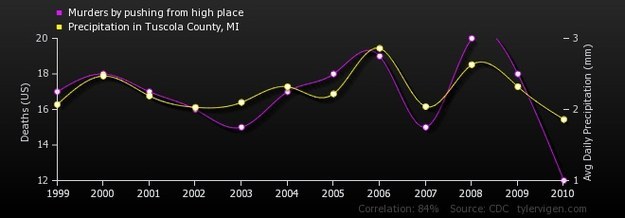

Tyler Vigen / Via tylervigen.com

Rainfall in Tuscola County, Michigan, Leads to Murders by Pushing From a High Place: The more precipitation in Tuscola County, the more cases of people being murdered by being pushed from a high place. As you can see, they follow similar patterns. SCIENCE!

Except no, actually, not science. And if you read headlines like this, you should pay extra attention to whether the journalists distinguish correlation from causation, and whether they claim that one thing causes another without explaining exactly how that might be. Usually there are other possible explanations, and a good journalist should mention them.

Does the story address both positives and negatives?


“If [a health story is] one-sided (i.e., only extols the positive), then I would view it more skeptically,” Dr. Kevin Pho told BuzzFeed Life in an email. A practicing physician and the editor of KevinMD.com, a popular healthcare blog with over 1,000 healthcare professionals as contributors, Pho emphasizes that the best health reporting demonstrates nuance, especially when it comes to new medical tests and technologies. Take cancer screening, for instance. “Sometimes, cancer screening tests are performed without evidence to back their efficacy (PSA tests for prostate cancer, for one),” Pho says. “Doing so exposes patients to medical complications, or can uncover incidental findings that necessitate more tests. Such uncritical reporting does harm to patients, who generally believe that more tests equate to better medicine.” When health reporting takes a more nuanced approach and articulates pros and cons of medical tests, “[it] will make my job easier in the exam room,” Pho says.

Along these lines: If a story talks about the accuracy of a medical test, it should also talk about the test’s specificity, meaning how reliably it gives a negative result to people who don’t have the disease. “What also matters is the rate of false-positives — how often will that test tell you a person has a disease when they don’t,” Yong says. If a story only reports on accuracy but does not say anything about specificity, then you can be sure that you’re not getting all the information you need to make a fully informed judgment about how valuable the test is in real life.
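A bit of arithmetic shows why false positives matter so much. The Python sketch below uses invented numbers (a disease affecting 1% of people, and a test that catches 90% of true cases and correctly clears 90% of healthy people); the function name `positive_predictive_value` and all of its inputs are assumptions chosen for illustration, not figures from any real test.

```python
def positive_predictive_value(prevalence, sensitivity, specificity, population=10_000):
    """Share of positive results that come from people who really have the disease."""
    sick = population * prevalence
    healthy = population - sick
    true_positives = sick * sensitivity            # sick people the test catches
    false_positives = healthy * (1 - specificity)  # healthy people wrongly flagged
    return true_positives / (true_positives + false_positives)

# Hypothetical test: 1% of people have the disease, 90% sensitivity, 90% specificity.
ppv = positive_predictive_value(prevalence=0.01, sensitivity=0.90, specificity=0.90)
print(f"chance that a positive result is a real case: {ppv:.1%}")
```

With these made-up inputs, roughly 90 sick people and 990 healthy people out of 10,000 would test positive, so only about 8% of positive results are real cases. A story that calls such a test “90% accurate” without mentioning false positives leaves out the part that matters most to the person holding the result.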

The most trustworthy health articles will have multiple sources, and at least one of them shouldn’t be connected to the research in any direct way.


Gary Schwitzer is a former health journalist, and is currently the publisher of HealthNewsReview.org and the Health News Watchdog Blog, where he conducts research on and assesses the quality of mainstream health news reporting. He has spent decades thinking about accuracy in health journalism, and he and his colleagues at HealthNewsReview.org have come up with 10 criteria that they use to assess the legitimacy of a health news story.

Appropriate sourcing is a big one.

“If you see a story that makes a claim about some healthcare idea, and there’s only a single source, and the source is the promoter of that idea — the doctor, the researcher, the company executive, it doesn’t matter — if it’s a single-source story, it’s [health journalism] malpractice,” Schwitzer says. “If you only see one person quoted, and it’s someone promoting his or her own work — let the fireworks and the red flags go off.”

Another way to think of it: Just as you’d get a second opinion if a doc told you to amputate, the best reported health stories also get multiple opinions from a variety of experts on any given topic.

Also, those sources should be the most qualified people to talk about what they’re talking about.

An emergency room doctor isn’t the best person to talk about breast cancer screening, for instance. And an academic researcher wouldn’t necessarily know the same things that a practicing clinician does. “[W]hen reading quotes from physicians, ask whether they are actively practicing medicine, or speaking in a non-clinical capacity — such as academia or in the corporate world,” Pho said.

Good health reporting should discuss costs.


This is one of the 10 criteria Schwitzer’s HealthNewsReview.org uses to assess the quality of health articles. “If it’s a story that talks about a new idea in healthcare and it doesn’t discuss costs, that should be a huge red flag,” he says.

Schwitzer and his colleagues analyzed nearly 1,900 health news stories between 2006 and 2013, and they found that about 70% of the stories they looked at didn’t adequately address cost. “What kind of informed consumer population can you have when 70% of the time you’re making things look terrific, risk-free, and without a price tag?” he says.

The headline should not overstate the findings of the research.


Ignore the sensationalist headline and try to understand exactly what the research uncovered — and pay attention to where the study findings end and speculation begins. That’s where your skepticism should kick in.

“This is the approach I take when I look at studies myself [in my reporting]: Try to figure out the bare minimum you can say based on what the study has shown,” Yong says.

Yong says to imagine a study that shows that a chemical kills cancer cells in a dish in a laboratory. “What it says is we might need to do a bit more research about this… but what it doesn’t say is that the chemical will kill cancer [in people]. The best thing a skeptical reader can do is to pare that right back and to ask, What is this saying? What is the bare minimum I can take away from this? Everything else is just dressing.”

If the study was done on humans, pay attention to who those humans were: The study is stronger if they’re randomly selected, nationally representative, and if there are a LOT of them.

When research is done on actual humans, ask who participated in the study. Were they a huge group of randomly selected, nationally representative people, or a small group that wasn’t randomly selected at all (say, 12 post-menopausal women with sleep issues)? All else being equal, the more people involved in the study, the better. And the more the people in the study resemble the population as a whole, the more applicable the results are to the population as a whole.
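For a rough sense of why headcount matters, here is a small Python sketch using the textbook 95% margin-of-error formula for a proportion. It assumes a simple random sample, which many studies are not, and the approximation is crude for very small groups; the helper name `margin_of_error` and the sample sizes are hypothetical.

```python
import math

def margin_of_error(sample_size, proportion=0.5, z=1.96):
    """Approximate 95% margin of error for a proportion from a simple random sample."""
    return z * math.sqrt(proportion * (1 - proportion) / sample_size)

small_study = margin_of_error(12)    # e.g. a 12-person study
large_study = margin_of_error(1200)  # e.g. a 1,200-person study

print(f"12 participants:   +/- {small_study:.1%}")
print(f"1200 participants: +/- {large_study:.1%}")
```

Under these assumptions, the 12-person estimate could be off by about 28 percentage points in either direction, while the 1,200-person estimate is good to within about 3. Note what the formula leaves out: it says nothing about whether the participants resemble anyone else, so a big but unrepresentative sample can still mislead.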

Lots of psychology research, for instance, is conducted on college students, because they’re easily accessible on college campuses (where much of that research is conducted). The problem is that college students aren’t a diverse group: they don’t represent the rest of the population in terms of age, race, socioeconomic status, you name it.

So those results might be fascinating, but you have to look at them critically. They really only say that college students at this point in history at a mid-size university in the Midwest have these behavior patterns. They don’t say that all people have these behavior patterns, or that they always have and always will.

The article should discuss the findings within a greater context.

Studies aren’t done in a vacuum. The best way to look at scientific research is to think of it as an ongoing discussion among scientists, in which they inspire, critique, and learn from each other, and build on each other’s earlier work to reach discoveries with greater and greater ramifications down the road.

The problem arises when journalists report on these findings out of context, as if each individual finding were a major breakthrough in modern medicine, rather than the incremental step that it actually is. Dr. Jen Gunter, an OB/GYN and pain medicine physician who also writes for numerous publications, points to news stories about case reports as a potential source of confusion and misinformation for the public. A case report is essentially a write-up of a single patient dealing with a particular issue. “While a case report for a really rare medical condition that affects only 100 people in the United States has a lot of value [because that might be all the data you’re going to get], I wish we saw less reporting about case reports that deal with things that are more common,” Gunter says.

Another example: reports about blood tests for different diseases. Perhaps a group of scientists has identified a protein or a hormone that’s more common in people with a certain disease. “Maybe in the future you get a cheap and easy blood test that would allow you to detect that disease by screening for that chemical, and it all sounds very straightforward,” Yong says. “But obviously a lot of those studies come out all the time and get reported on all the time, and we aren’t inundated with all sorts of cheap and easy-to-use blood tests.”

In other words: Take a step back and think of the reality of the situation. And look to see if the story mentions any sort of timeline for the blood test, rather than just reporting that a blood test might one day exist.

If the story sounds too good to be true, it probably is.

“All good results in medicine always come with hard work, so if it sounds too good to be true, get another opinion just to be sure,” Gunter says. Fair enough.

Maybe you could do a little more reading about this, if you want.


The tips above are helpful, but there is much more to learn about how to tell whether scientific research is sound and whether the reporting on it is legitimate. Have a look at Health News Review’s list of 10 criteria for journalists writing about health and science. Then check out this fantastic primer from Vox, “Why so many of the health articles you read are junk,” as well as this helpful and incredibly detailed deck of cards, “How can you tell if scientific evidence is strong or weak?”

And one last thing!

This post has me thinking a lot about glass houses and stone-throwing. In my years of reporting on health-related topics, I have definitely been guilty of writing over-hyped headlines and stories with varying degrees of inaccuracy or incompleteness. There are plenty of reasons for this: quick turnaround deadlines, the inability to reach enough sources in a short amount of time, and an actual business need to drive clicks all come to mind. That’s one of the reasons I wanted to write this post. In my new role as health editor at BuzzFeed, my aim is to be as accurate in my reporting as possible. The BMJ study, and reporting out this story, have both made me think a lot about how I can do better in the future.