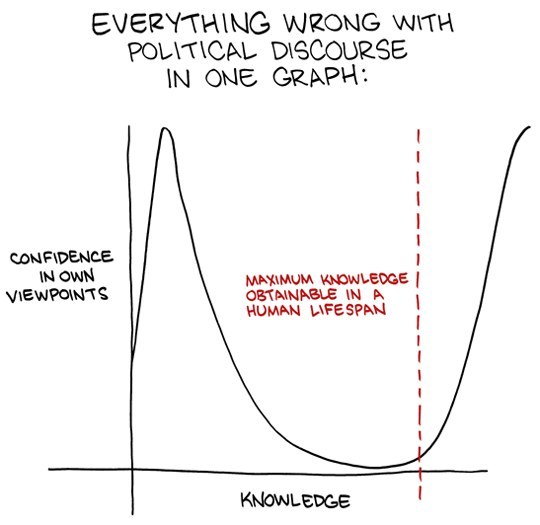

You think your brain is your friend.

It makes you think you’re really good at something when you’re humiliatingly bad at it.

SMBC / Via smbc-comics.com

In a kind universe, you’d know when you were bad at something, so you could avoid doing it. But that’s not how it is. In 1999, two psychologists – David Dunning and his student Justin Kruger – published a paper which showed that the opposite is true: People who are really bad at something tend to think they’re really good. The most competent people underestimate their abilities; the rest of us overestimate them, and the worse we are, the more we overestimate them by.

“In many areas of life, incompetent people do not recognise – scratch that, cannot recognise – just how incompetent they are,” wrote Dunning. The reason is that you need some skill at something to know how good at it you are. It’s impossible to tell if you suck if you’re too bad to know what “sucking” involves. This explains an awful lot of X Factor entrants, pub karaoke singers, and budding stand-up comedians.

It makes you feel like a fraud.

Chainsawsuit / Kris Straub / Via chainsawsuit.com

You know that feeling that you’re making everything up as you go along, that you’re an incompetent charlatan, and that everyone else will eventually find you out? You know. Don’t you? Please tell me that you do. It can’t just be me.

And of course it isn’t. “Impostor syndrome” was first noted in 1978 by two Georgia State University researchers, Pauline Rose Clance and Suzanne Imes. They noticed that lots of high-achieving women they interviewed believed themselves to be frauds, saying things like “I’m not good enough to be on the faculty here [at the university where she was a professor]. Some mistake was made in the selection process,” and “Obviously I’m in this position because my abilities have been overestimated.” However much these people achieved, however great their objective successes were, they couldn’t shake the feeling that it was all based on a lie, or a mistake.

Clance and Imes initially believed that it was more common in women than in men. But later research suggests that high achievers of both sexes suffer equally. It is hard to pin down exactly how common it is, but at least one professor of engineering found that when he told his straight-A students about it, they all immediately recognised themselves in the description.

Even Albert Einstein seems to have had feelings of this kind: He wrote to Queen Elisabeth of Belgium that “the exaggerated esteem in which my lifework is held makes me very ill at ease. I feel compelled to think of myself as an involuntary swindler.” And you’re probably not as good at your job as Einstein was at his.

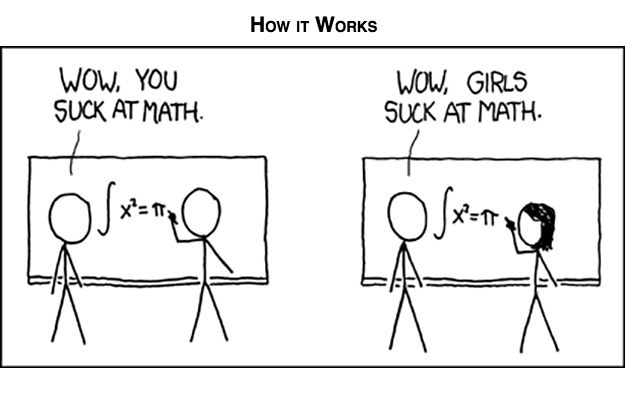

If people think you’re stupid, it makes you stupid.

XKCD / Via xkcd.com

There are people out there who think that blondes are stupid. There are people who think that women can’t do maths, or that black people aren’t as intelligent as white people.

It would be nice to be able to ignore them. But unfortunately, it’s not as simple as that. There is a phenomenon known as “stereotype threat”, and what it means is that if you are reminded of a negative stereotype about your group, you’ll be more likely to conform to it. So black people will do worse at a test if they are told that it’s an intelligence test (see graph), because of the stereotype that black people are less intelligent; female chess players will do worse if they’re told their opponent is male than if they aren’t told anything, because of the stereotype that men are better than women at chess; and older people will do worse at tests on memory and cognition if they’re told that it’s a test of “ageing and memory”.

This matters, and is more common than you may think: The website Reducing Stereotype Threat reports that the effect of stereotype threat has been recorded in the athletic performance of women and white people, women’s negotiation skills, gay men’s childcare abilities, and women’s driving. Those suffering from it are more likely to blame their failures on their own inability rather than external obstacles. And, of course, it becomes a self-fulfilling prophecy: If a member of a group performs badly at a task they are widely believed to be bad at, it reinforces the stereotype, and makes stereotype threat more of a problem.

Luckily it can be reduced, by – among other things – providing role models and reducing the emphasis on negative stereotypes. Which is a good reason for getting people from more diverse backgrounds on to TV, into parliament, and into top jobs.

It insists you’re right even when you’re not.

SMBC / Via smbc-comics.com

Do you ever find that other people – not you, obviously – are amazingly bad at realising that they’re wrong? You show them all the evidence, but they insist that it’s all lies and stick doggedly to their guns, waving some flimsy thing they’ve found on the internet.

Sadly, though, that’s how humans work. It’s called “confirmation bias”. We tend to discount any evidence, however rigorous, if it undermines our beliefs, while grasping hungrily at anything which supports them, even if it’s obviously spurious.

“We humans are extraordinarily good at finding justifications for whatever conclusion we want to reach,” says the psychologist Jonathan Haidt. “We can spot a supporting justification at 200 metres hiding in a tree.”

So if you believe that, say, Barack Obama wasn’t born in the United States, or that mankind didn’t go to the moon, you will find evidence that supports it, and ignore anything which doesn’t. More prosaically, it’s what makes the conversations between liberals and conservatives, or atheists and the religious, so soul-sappingly pointless: Each side thinks the other one’s “evidence” is meaningless.

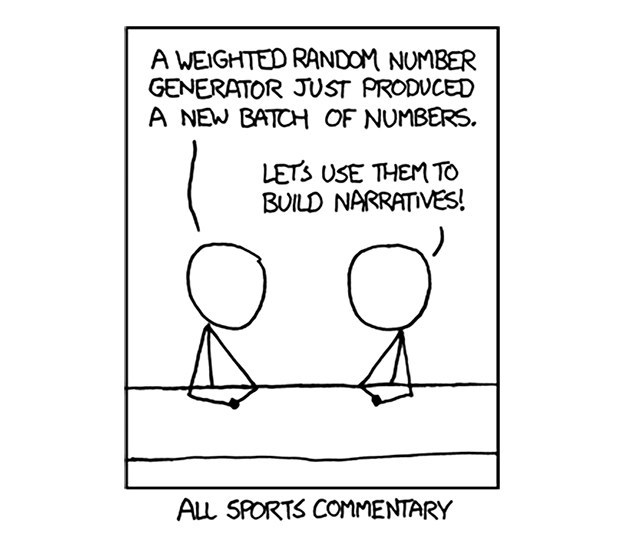

It makes you think stuff happens for a reason.

XKCD / Via xkcd.com

Human beings are incredibly keen to see patterns everywhere, to the extent that they see patterns where there are no patterns at all. That’s why you hear someone calling your name in the white noise in the shower, or you see a face in the folds of the pillow in the dark, or animals in the clouds. You can understand why this might have been useful for our ancestors – a “false positive”, where you see a sabre-toothed cat in the weeds but there isn’t really one, might be annoying, but a “false negative”, where you don’t see a sabre-toothed cat in the weeds but there actually is one and it eats you, would be more of a problem.
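The ancestors’ trade-off can be put as a tiny expected-cost calculation. This is a minimal sketch with made-up numbers: the cost units and the values of `cost_flee` and `cost_eaten` are hypothetical, chosen only to illustrate the idea that when being eaten is thousands of times worse than a wasted sprint, running away is the sensible call even when a predator is very unlikely.

```python
def break_even_probability(cost_flee: float, cost_eaten: float) -> float:
    """Probability of a predator at which fleeing and staying put have equal expected cost."""
    return cost_flee / cost_eaten


def should_flee(p_predator: float, cost_flee: float, cost_eaten: float) -> bool:
    """Flee if the expected cost of staying put exceeds the fixed cost of running.

    Staying put costs `cost_eaten` with probability `p_predator`; fleeing costs
    `cost_flee` whether or not a predator is there (hypothetical cost units).
    """
    expected_cost_of_staying = p_predator * cost_eaten
    return expected_cost_of_staying > cost_flee


if __name__ == "__main__":
    # Hypothetical costs: being eaten is 10,000 times worse than a wasted sprint.
    cost_flee, cost_eaten = 1.0, 10_000.0
    print("break-even probability:", break_even_probability(cost_flee, cost_eaten))
    for p in (0.5, 0.01, 0.001, 0.00001):
        print(f"p(predator) = {p}: flee? {should_flee(p, cost_flee, cost_eaten)}")
```

With these assumed costs, anything above a 1-in-10,000 chance of a cat in the weeds is worth running from, so a mind tuned this way will happily produce lots of false positives.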

We see patterns in random data as well. We want to see causes, not just the workings of chance. Again, that could have been helpful to our ancestors: If three people get cholera around the water hole, it might be a fluke, but you might not want to drink from that water hole anyway, to be on the safe side. The Nobel Prize-winning psychologist Daniel Kahneman calls this “belief in the law of small numbers”: the conviction that we can extrapolate from small samples to the wider world. It’s closely tied to what psychologists call the “representativeness heuristic”.

The trouble is when it leads us to make bad decisions. In the Second World War, says Kahneman in his book Thinking, Fast and Slow, Londoners noticed that parts of their city hadn’t been hit by V2 bombs – so they decided that the Germans had spies in those areas whom they were trying to keep safe. People avoided the areas where the bombs had previously landed. But an analysis of the strikes showed that they were landing exactly as you’d expect if they were completely random.
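The kind of check described here can be sketched in a few lines: divide a map into equal squares, count the strikes in each, and compare those counts with what a Poisson distribution predicts for purely random hits. This is a simulation under assumed numbers (the 600 squares and 540 strikes are hypothetical, not the wartime data), but it shows that randomness alone leaves plenty of squares untouched, with no spies required.

```python
import math
import random
from collections import Counter


def simulate_strikes(n_squares: int, n_strikes: int, seed: int = 0) -> list[int]:
    """Drop each strike on a uniformly random square; return the number of strikes per square."""
    rng = random.Random(seed)
    counts = [0] * n_squares
    for _ in range(n_strikes):
        counts[rng.randrange(n_squares)] += 1
    return counts


def poisson_expected(n_squares: int, n_strikes: int, k: int) -> float:
    """Expected number of squares with exactly k strikes if strikes are random (Poisson model)."""
    lam = n_strikes / n_squares  # average strikes per square
    return n_squares * math.exp(-lam) * lam**k / math.factorial(k)


def compare(n_squares: int = 600, n_strikes: int = 540, seed: int = 1, max_k: int = 5):
    """Return rows of (k, observed squares with k strikes, Poisson-expected squares with k strikes)."""
    observed = Counter(simulate_strikes(n_squares, n_strikes, seed))
    return [
        (k, observed.get(k, 0), poisson_expected(n_squares, n_strikes, k))
        for k in range(max_k + 1)
    ]


if __name__ == "__main__":
    print("strikes  observed  expected")
    for k, obs, expected in compare():
        print(f"{k:>7}  {obs:>8}  {expected:>8.1f}")
```

With an average of 0.9 strikes per square, roughly 40% of squares (e^-0.9 ≈ 0.41) are expected to escape entirely, which is exactly the sort of “safe area” it is tempting to read meaning into.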

We don’t need to worry so much about V2 bombs these days, but we still have the same problem. People who see a pattern to how often certain numbers come up in the lottery, and sportspeople who always play in the same lucky socks after they scored in them the first time, are falling victim to the belief in the law of small numbers.
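A quick simulation shows why “hot” and “cold” lottery numbers are an artefact of small samples. It assumes a hypothetical fair draw of 6 balls from 49 (not any particular real lottery’s rules) and measures how uneven the ball counts are, as a coefficient of variation (standard deviation divided by mean), after a few draws versus many.

```python
import random
import statistics


def ball_counts(n_draws: int, n_balls: int = 49, balls_per_draw: int = 6, seed: int = 0) -> list[int]:
    """Run n_draws fair draws (no repeats within a draw) and count how often each ball came up."""
    rng = random.Random(seed)
    counts = [0] * n_balls
    for _ in range(n_draws):
        for ball in rng.sample(range(n_balls), balls_per_draw):
            counts[ball] += 1
    return counts


def relative_spread(counts: list[int]) -> float:
    """Coefficient of variation: population standard deviation of the counts over their mean."""
    return statistics.pstdev(counts) / statistics.mean(counts)


if __name__ == "__main__":
    for n_draws in (10, 100, 10_000):
        counts = ball_counts(n_draws, seed=42)
        print(
            f"{n_draws:>6} draws: least frequent ball {min(counts)} times, "
            f"most frequent {max(counts)} times, spread {relative_spread(counts):.3f}"
        )
```

Every ball is equally likely in every draw, yet after ten draws a good number of balls will typically not have appeared at all while a few have come up three or more times. The spread shrinks roughly in proportion to one over the square root of the number of draws, so the “pattern” fades only once the sample gets large.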

It makes you scared of things that will never happen.

SMBC / Via smbc-comics.com

If you live in the UK, your odds of dying in a terrorist attack are roughly equivalent to your odds of dying in an asteroid strike: millions to one against. (Precisely how many millions depends on who you’re asking, and how they’ve defined “terrorism”, but I like one spuriously precise estimate of 1 in 9.3 million. Slightly more likely than dying in an avalanche, apparently.)

Similarly, you are, statistically speaking, simply not going to die in an aeroplane crash. You are not going to be eaten by a shark. And you’re almost certainly not going to get murdered.

And yet we are all much more scared of those vanishingly unlikely things than we are of the stuff that probably will kill us – diabetes, heart disease; among the young, traffic accidents. This is because of something called the “availability heuristic”. It’s a boring name, but an important concept. Daniel Kahneman (again) and Amos Tversky described it in the 1970s. The idea is that when we’re asked to think of how likely something is, we don’t think about the statistical probabilities, but ask ourselves a much simpler question: “How easily can I think of an example?”

And, of course, dramatic things – things like terrorism and plane crashes – are much more memorable than slow, uncinematic risks like diabetes. What’s more, they’re much more likely to get on the news, so we see examples from around the world, all the time. This leads to some terrible decisions: For instance, fear of flying and of terrorism after 9/11 led millions of Americans to drive instead of fly, and an estimated 1,500 more people than usual died on American roads in the year after the attacks.